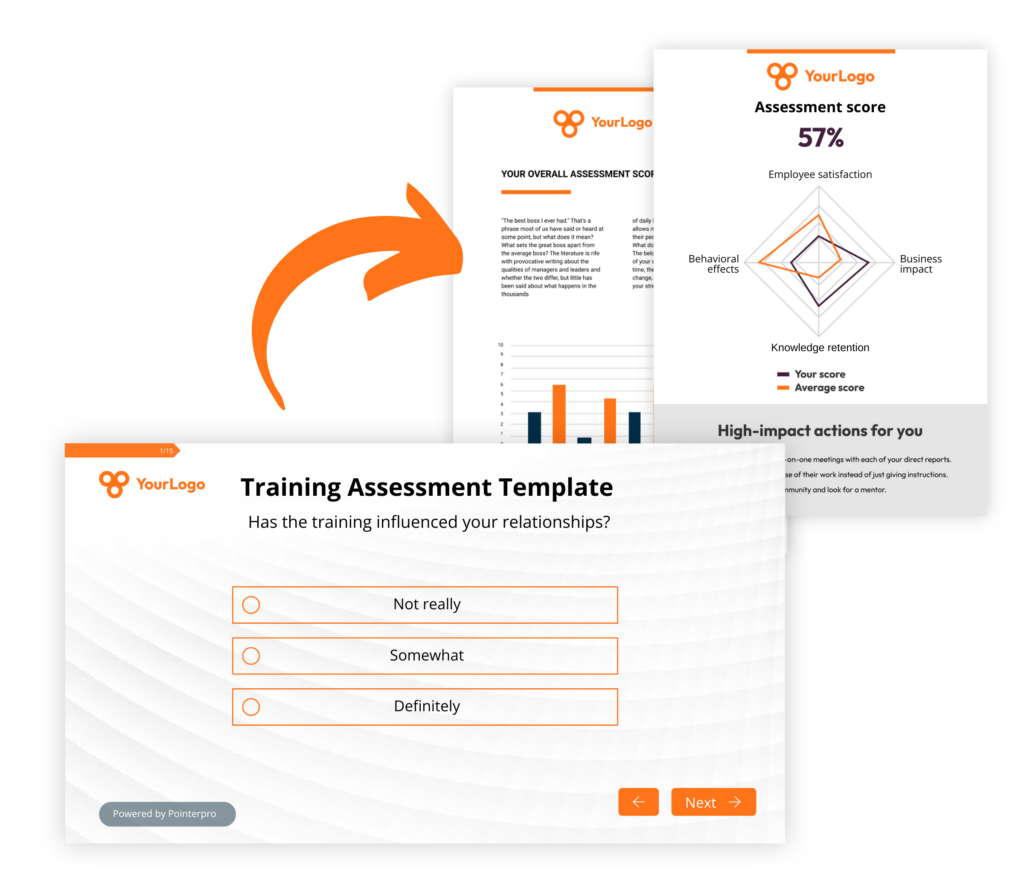

Training assessment template

Get more out of your L&D and employee training initiatives by putting in place an objective training assessment template.

How? By building one that automatically returns actionable feedback in PDF reports.

Pointerpro is the 2-in-1 software that combines assessment building with personalized PDF report generation.

What is a training assessment?

A training assessment is a systematic evaluation of the effectiveness, efficiency, and ultimately the impact of a training program. It aims to determine whether the predefined objectives of a training initiative have been met. Typically, a training assessment focuses on evaluating one or more of the following:

- Learning outcomes: Assessing whether participants have acquired the knowledge, skills, and abilities that the training was designed to impart.

- Behavioral changes: Determining if the training has led to changes in behavior or performance in the workplace.

- Effect on business goals: Evaluating the extent to which the training has contributed to achieving organizational objectives and business goals.

As part of a training assessment, higher management may additionally expect an objective evaluation of the return on investment (ROI), comparing the costs of the training program to the benefits gained in terms of improved performance and productivity. Generally, a training assessment template is designed to collect data that can then be analyzed to draw conclusions and, in a report, make recommendations for improving future training.

3 reasons to use Pointerpro as a training assessment tool


Interactive user experience

With the Questionnaire Builder, you get to create an engaging assessment. How? With numerous design and layout options, useful widgets, and countless question types.

Refined, score-based analysis

Our custom scoring engine helps you quantify and categorize diverse answers. The result? An objective and nuanced assessment of your respondents’ training feedback.

Automated feedback in PDF

Thanks to your setup in the Report Builder, respondents instantly receive a detailed PDF report with personalized responses, useful information, and your brand design.

1,500+ businesses worldwide build assessments with Pointerpro

5 very effective training assessment types and methods

The training assessment method we’ll focus on in the rest of this article, and the most widespread one, is the questionnaire-based assessment.

Questionnaires are widely used tools for collecting both quantitative and qualitative data from training participants. They typically include a mix of closed-ended questions, such as multiple-choice or Likert scale items, and open-ended questions that allow participants to provide detailed feedback.

A well-balanced questionnaire gauges participants’ appreciation of the training, assesses their knowledge retention, and determines the perceived relevance and applicability of the training content. By analyzing the responses, trainers can identify strengths and weaknesses in the training program and make necessary adjustments to improve its effectiveness.

Other methods that can complement questionnaires:

- Interviews: They involve direct, one-on-one or group interactions with training participants to gather in-depth feedback and insights. This method allows for a more nuanced understanding of participants' experiences, opinions, and the impact of the training on their work performance. Interviews can be structured, semi-structured, or unstructured, depending on the goals of the assessment. The conversational nature of interviews helps uncover detailed information that might not be captured through written surveys, providing rich qualitative data that can inform future training improvements and highlight specific areas of success or concern.

- Observations: These involve watching participants as they engage in training activities or perform their job duties post-training. This method allows assessors to evaluate the practical application of newly acquired skills and knowledge in a real-world context. Observations can be structured, with specific criteria and checklists, or unstructured, providing a more general view of behavior and performance. By directly observing participants, trainers can gather objective data on the effectiveness of the training and identify any gaps between training and actual job performance, ensuring that the training translates into tangible workplace improvements.

- Quizzes and tests: These can also be questionnaire-based assessments, but unlike a typical training assessment template, they focus entirely on evaluating participants' knowledge retention and understanding of the training material. Quizzes and tests can be administered in various formats, including multiple-choice, true/false, short-answer, or essay questions. Conducted either at the end of a training session or periodically throughout the training, they help determine how well participants have grasped the concepts and skills being taught. The results provide immediate feedback to both participants and trainers, indicating areas where learners may need additional support or review.

- Simulations and role-playing: Like quizzes and tests, these focus on evaluating how well participants have absorbed the knowledge and skills imparted to them during the training sessions. Simulations and role-playing are dynamic assessment methods that create realistic scenarios in which participants practice and demonstrate their skills. These activities mimic real-world challenges and require participants to apply their knowledge and problem-solving abilities in a controlled environment. Through simulations and role-playing, trainers can assess how well participants handle practical tasks, make decisions, and interact with others. This hands-on approach helps identify strengths and areas for development, ensuring that participants are well prepared for similar situations in their actual work settings.

Training assessment vs training needs assessment

A training needs assessment (TNA), also called a training needs survey, and a training assessment serve distinct purposes within the training and development lifecycle, though both are crucial for ensuring effective training programs.

A training needs assessment (TNA) is conducted before a training program is designed and implemented. Its primary goal is to identify and analyze the specific training needs of an organization, a group, or an individual. This process helps determine what training is necessary to address gaps in skills, knowledge, and performance. The TNA focuses on aligning training initiatives with organizational goals and objectives, understanding the root causes of performance issues, and prioritizing training needs based on their impact on the organization. Methods used in a TNA include surveys, interviews, focus groups, performance reviews, job analysis, and competency assessments. The outcome of a TNA is a comprehensive report that details the specific training requirements, target audience, and recommended training solutions to address identified gaps.

On the other hand, a training assessment evaluates the effectiveness and impact of a training program after it has been delivered. This process measures whether the training met its objectives and how it has influenced participants’ performance. Conducted during and after the implementation of a training program, the training assessment focuses on measuring learning outcomes, evaluating changes in behavior and job performance, assessing participant satisfaction, and determining the return on investment (ROI) of the training. Methods used for training assessment include questionnaires, interviews, quizzes/tests, observations, self-assessments, peer assessments, performance metrics, simulations, case studies, and feedback from supervisors and managers. The outcome is a detailed evaluation of the training’s effectiveness, providing insights into what worked well and areas for improvement, and offering recommendations for future training initiatives.

Key factors for a solid training assessment template: Questionnaire and report

As already mentioned, one ultimate goal of a training assessment is to formulate recommendations for future improvements. The best way to formalize these and inspire action is to create a report. With Pointerpro, reports are generated automatically: based on a template you create in the Report Builder, you can generate a personalized PDF report for each participant, or aggregate PDF reports that take into account the response data of all respondents.

Here are some key factors that will contribute to the success of your training assessment template, for both the questionnaire and the report you build:

Training assessment questionnaire template:

- Introduction and instructions: Begin with a brief introduction explaining the purpose of the assessment and provide clear instructions on how to complete it. This helps participants understand the importance of their feedback and how it will be utilized to improve future training sessions.

- Participant information: Collect demographic and background information such as name (optional), job title, department, length of time in the role, and prior related training. This data helps contextualize the feedback and identify trends across different participant groups.

- Training content evaluation: Include questions to assess the relevance and quality of the training material. Participants can rate the clarity, comprehensiveness, engagement, and overall relevance of the content to their job, helping to determine the effectiveness of the training materials.

- Trainer evaluation: Evaluate the effectiveness of the trainer by asking participants about the trainer's knowledge, delivery style, engagement, and ability to answer questions. This section provides insights into the trainer's performance and areas for improvement.

- Learning outcomes: Measure what participants have learned by including questions related to the specific skills and knowledge gained during the training. For example, participants might be asked if they feel more confident in using new software tools as a result of the training.

- Application and behavioral change: Determine how participants plan to apply what they've learned by asking about their intended use of the skills and any anticipated changes in behavior. This helps gauge the practical impact of the training on job performance.

- Training environment and logistics: Assess the suitability of the training environment and logistics by gathering feedback on the venue, materials, scheduling, and duration of the training. This information is vital for ensuring a conducive learning environment.

- Overall satisfaction: Gauge overall satisfaction with the training by asking participants to provide an overall rating and any open-ended comments. This section captures the general sentiment towards the training program.

- Suggestions for improvement: Collect ideas for enhancing future training sessions by including open-ended questions for participant suggestions. This section is crucial for continuous improvement based on participant feedback.

- Interactivity, question logic, and scoring: Apply best practices for interactivity, question logic, and scoring to enhance the assessment experience and data quality. Incorporate interactive elements such as branching questions that adapt based on previous answers, ensuring the assessment is relevant to each participant. Use question logic to streamline the questionnaire, avoiding redundant questions and tailoring follow-up questions based on initial responses. Implement a clear scoring system to quantify feedback, allowing for easier analysis and comparison of results. These practices not only make the questionnaire more engaging for participants but also improve the accuracy and usefulness of the collected data. Pointerpro’s Stacy Demes explains the power of question (or “survey”) logic in a dedicated video.
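To make the branching idea concrete, here is a minimal Python sketch of display logic. It assumes a hypothetical data model (a `Question` class with an optional `show_if` condition and a `visible_questions()` helper) invented purely for illustration; it is not how Pointerpro’s Questionnaire Builder stores logic. The question IDs and thresholds are also made up.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional

Answers = Dict[str, Any]  # question ID -> answer given so far


@dataclass
class Question:
    qid: str
    text: str
    # Display condition evaluated against earlier answers; None means "always show"
    show_if: Optional[Callable[[Answers], bool]] = None


# Hypothetical questionnaire: two base questions, each with a conditional follow-up
QUESTIONS: List[Question] = [
    Question("satisfaction", "How would you rate your overall satisfaction with the training session? (1-5)"),
    Question(
        "low_sat_reason",
        "What was the main reason for your rating?",
        # Shown only to dissatisfied respondents; hidden while satisfaction is unanswered
        show_if=lambda a: a.get("satisfaction", 5) <= 2,
    ),
    Question("used_software", "Did the training cover software tools you use in your job? (1 = yes, 0 = no)"),
    Question(
        "software_confidence",
        "How confident are you in using the new software tools? (1-5)",
        show_if=lambda a: a.get("used_software") == 1,
    ),
]


def visible_questions(answers: Answers) -> List[str]:
    """Return the IDs of the questions this respondent should see, in order."""
    return [q.qid for q in QUESTIONS if q.show_if is None or q.show_if(answers)]


print(visible_questions({"satisfaction": 4, "used_software": 0}))
# ['satisfaction', 'used_software']
```

A respondent who rates satisfaction 2 or lower gets the open-ended follow-up, while one who answers 0 to the software question never sees the confidence question. Keeping each condition next to the question it controls makes the logic easy to review when you pilot test the questionnaire.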

Training assessment report template:

- Executive summary: Provide a high-level overview of the findings, including key takeaways, major strengths, and areas for improvement. This summary allows stakeholders to quickly grasp the main insights from the assessment.

- Participant demographics: Summarize participant information such as departments, roles, and experience levels to contextualize the feedback. Understanding the demographic breakdown helps identify trends and patterns in the responses.

- Detailed findings: Present detailed results from each section of the questionnaire, including quantitative data (average scores, response distributions) in clear, visual charts and qualitative data (common themes in comments). This comprehensive analysis provides a deep understanding of the training's impact.

- Learning outcomes and application: Analyze the effectiveness of the training in terms of learning and application by evaluating how well participants met the learning objectives and how they plan to use their new skills. This section highlights the practical benefits of the training.

- Overall satisfaction and suggestions: Summarize general satisfaction with the training and participant suggestions for improvement. This section captures overall sentiment and provides actionable insights for enhancing future training programs.

- Actionable recommendations: Provide clear, actionable recommendations based on the findings. These specific suggestions for improving future training sessions address both content and delivery, ensuring continuous improvement.
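The “Detailed findings” section of an aggregate report rests on two simple statistics per closed question: the average score and the response distribution. The sketch below computes both in Python, assuming Likert answers are already coded 1 to 5 and stored as one dictionary per respondent. The `summarize()` function, the question IDs, and the sample data are hypothetical.

```python
from collections import Counter
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical sample: one dict per respondent, Likert answers coded 1-5.
# Respondents may skip questions, so keys can be missing.
responses: List[Dict[str, int]] = [
    {"content_relevance": 4, "trainer_delivery": 5},
    {"content_relevance": 5, "trainer_delivery": 3},
    {"content_relevance": 3},
]


def summarize(rows: List[Dict[str, int]], question_id: str, scale=range(1, 6)) -> Dict[str, object]:
    """Average score and response distribution for one closed question."""
    values = [row[question_id] for row in rows if question_id in row]
    counts = Counter(values)
    average: Optional[float] = round(float(mean(values)), 2) if values else None
    return {
        "n": len(values),  # number of respondents who answered this question
        "average": average,
        # Include every scale point, even unused ones, so charts stay comparable
        "distribution": {point: counts.get(point, 0) for point in scale},
    }


for qid in ("content_relevance", "trainer_delivery"):
    print(qid, summarize(responses, qid))
```

Counting every scale point, including those nobody chose, keeps the distributions comparable when you chart several questions side by side.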

How to digitize your own change readiness assessment template for change enablement

Digitizing your own change readiness assessment template involves transforming your paper-based change management model into questions, and ultimately into a digital tool that evaluates answers using score-based logic. Here are the key steps:

- Define objectives: Clearly outline the purpose and objectives of your change readiness assessment. Determine the key questions you need to ask to gather relevant data and insights. Also determine the best question logic and the weight you want to attribute to different questions and answer options. Lastly, indicate which questions should be grouped together into sub-categories to measure specific aspects of change readiness (e.g., cultural readiness, technical readiness, knowledge-based readiness).

- Select a digital platform: Choose a digital platform or tool to host your assessment. The crucial point here is to find a platform, or combination of tools, that allows you to streamline advice based on the outcomes of the assessment. Pointerpro offers these capabilities in one platform.

- Design the assessment: Create the digital version of your assessment questionnaire. Structure it in a user-friendly format with clear instructions and intuitive navigation. Ensure that the questions align with your objectives and cover all relevant aspects of change readiness.

- Customize question types: Use various question types offered by the digital platform, such as multiple-choice, Likert scale, open-ended, or ranking questions, to capture diverse responses effectively.

- Incorporate logic and branching: Implement logic and branching features to tailor the assessment experience based on respondents' answers. This ensures that participants only see questions relevant to their circumstances, streamlining the process and improving engagement.

- Add multimedia elements: Enhance the assessment by incorporating multimedia elements such as images, videos, or links to additional resources. These can provide context, clarification, or examples related to the questions.

- Test the assessment: Conduct thorough testing of the digital assessment to identify and address any technical issues, ensure functionality across different devices and browsers, and validate the clarity and coherence of the questions.

- Iterate and improve: Once you’ve launched officially, gather feedback on the assessment process and outcomes, and use it to iterate and improve future assessments. Continuously refine your digital change readiness assessment template based on lessons learned and evolving organizational needs.

By following these steps, you’ll have an effective change readiness assessment, one that enables a more efficient, scalable, and data-driven approach to understanding and managing organizational change.
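The weighting and sub-category grouping from the “Define objectives” step boil down to a weighted sum per category. Here is a minimal Python sketch, assuming every answer is scored on a scale with a maximum of 5 points and that weights are relative multipliers you choose. The question IDs and weights are hypothetical, and the category names simply reuse the examples listed in that step; this is not a description of Pointerpro’s scoring engine.

```python
from collections import defaultdict
from typing import Dict

# Hypothetical configuration: which sub-category each question belongs to,
# and how heavily it counts (relative multipliers).
QUESTION_CATEGORY: Dict[str, str] = {
    "q1": "cultural",
    "q2": "cultural",
    "q3": "technical",
    "q4": "knowledge-based",
}
QUESTION_WEIGHT: Dict[str, float] = {"q1": 2.0, "q2": 1.0, "q3": 1.0, "q4": 1.5}
MAX_POINTS = 5  # assumed maximum score per answer


def category_scores(answers: Dict[str, int]) -> Dict[str, float]:
    """Weighted score per sub-category, as a percentage of the achievable maximum."""
    earned: Dict[str, float] = defaultdict(float)
    possible: Dict[str, float] = defaultdict(float)
    for qid, points in answers.items():
        category = QUESTION_CATEGORY[qid]
        weight = QUESTION_WEIGHT[qid]
        earned[category] += weight * points
        possible[category] += weight * MAX_POINTS  # only answered questions count
    return {cat: round(100 * earned[cat] / possible[cat], 1) for cat in earned}


print(category_scores({"q1": 4, "q2": 2, "q3": 5, "q4": 3}))
# {'cultural': 66.7, 'technical': 100.0, 'knowledge-based': 60.0}
```

Expressing each category as a percentage of its own maximum lets you compare categories that contain different numbers of questions, which is what a report needs when it turns scores into category-specific advice.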

50 training assessment example questions

Here are 50 training assessment example questions divided into 5 categories:

- 10 training assessment example questions to evaluate DEI training

- 10 training assessment example questions to evaluate sales training

- 10 training assessment example questions to evaluate compliance training

- 10 training assessment example questions to evaluate leadership training

- 10 training assessment example questions to evaluate customer service training

10 training assessment example questions to evaluate DEI training

- How effectively did the DEI training enhance your understanding of diversity and inclusion concepts?

- How well did the training address issues related to unconscious bias?

- To what extent do you feel the DEI training has influenced your behavior towards colleagues?

- How relevant was the content of the DEI training to your daily work environment?

- How engaging were the activities and discussions during the DEI training?

- How confident are you in applying the DEI principles learned in the training?

- How effective were the trainers in addressing and answering questions about DEI topics?

- How well did the DEI training provide practical tools and strategies for promoting inclusion?

- How likely are you to recommend this DEI training to others in your organization?

- How satisfied are you with the overall quality and delivery of the DEI training?

These training assessment template questions are designed to evaluate a training program aimed at improving the DEI posture of the employees in your company. To find out whether you need such a training in the first place, you can distribute a DEI assessment in your organization. Our colleague Stacy gives a brief intro in a video.

10 training assessment example questions to evaluate sales training

- How well did the sales training improve your understanding of effective sales techniques?

- To what extent has the sales training enhanced your ability to close deals?

- How useful were the role-playing exercises in the sales training for practicing sales scenarios?

- How effectively did the sales training cover techniques for handling objections?

- How relevant was the sales training content to your daily sales activities?

- How confident are you in applying the sales strategies learned in the training?

- How clear and understandable was the sales training material provided?

- How effective was the feedback provided during the sales training sessions?

- How engaging were the sales training sessions?

- How likely are you to recommend this sales training to your peers?

These sales training assessment template questions focus on evaluating the impact of the training on participants’ sales skills and techniques. They aim to determine how well the training improved understanding and application of sales strategies, including closing deals and handling objections. The effectiveness of role-playing exercises, clarity of materials, and overall engagement are assessed to measure the practical benefits of the training and the participants’ confidence in applying new skills.

10 training assessment example questions to evaluate compliance training

- How well did the compliance training increase your understanding of relevant regulations and laws?

- To what extent do you feel more confident about complying with industry regulations after the training?

- How effective was the training in clarifying your responsibilities related to compliance?

- How relevant was the compliance training content to your specific job functions?

- How clear was the presentation of compliance-related policies and procedures during the training?

- How useful were the case studies or examples provided in understanding compliance issues?

- How confident are you in applying the compliance knowledge gained from the training to real-world situations?

- How well did the compliance training address potential challenges you might face in your role?

- How effective were the training materials and resources provided for compliance training?

- How satisfied are you with the overall compliance training experience?

These compliance training assessment template questions are crafted to measure how effectively the training increased participants’ understanding of relevant regulations and their confidence in complying with these regulations. The questions address the clarity of training materials, relevance to job functions, and practical application of compliance knowledge. They also evaluate how well the training prepared participants to handle compliance-related challenges and their overall satisfaction with the training experience.

10 training assessment example questions to evaluate leadership training

- How well did the leadership training enhance your leadership skills?

- To what extent do you feel the training improved your ability to manage teams effectively?

- How effective was the training in helping you develop strategic thinking skills?

- How relevant was the leadership training content to your current leadership challenges?

- How confident are you in applying the leadership techniques learned from the training?

- How well did the training address issues related to team motivation and engagement?

- How effective were the interactive elements (e.g., group activities, role-playing) in the leadership training?

- How clear were the goals and objectives of the leadership training?

- How engaging was the leadership training?

- How likely are you to recommend this leadership training to other managers or leaders?

These leadership training assessment template questions evaluate how well the program enhanced participants’ leadership and management skills. They focus on the training’s effectiveness in developing strategic thinking, team management, and motivation techniques. The assessment also considers the relevance of the training content to current leadership challenges, the effectiveness of interactive elements, and overall engagement and satisfaction with the training.

10 training assessment example questions to evaluate customer service training

- How effectively did the customer service training improve your communication skills with customers?

- To what extent has the customer service training helped you resolve customer issues more efficiently?

- How useful were the role-playing exercises in preparing you for real customer interactions?

- How well did the training cover techniques for handling difficult or irate customers?

- How relevant was the training content to your daily customer service tasks?

- How confident are you in applying the customer service strategies learned from the training?

- How clear and understandable were the customer service training materials?

- How effective was the feedback provided during the customer service training sessions?

- How engaging were the customer service training sessions?

- How likely are you to recommend this customer service training to others in your organization?

The customer service training assessment template questions measure the impact of the training on participants’ communication and problem-solving skills with customers. These questions evaluate how well the training improved handling of customer issues, the usefulness of role-playing exercises, and the relevance of the content to daily tasks. The assessment also covers participants’ confidence in applying the training and their satisfaction with the training materials and overall experience.


Tips on how to create a highly objective training assessment

- Design clear and neutral questions: Ensure that each question is clear, concise, and free from bias. Avoid leading questions that might influence the respondent’s answer. For example, instead of asking, "How much did you enjoy the excellent training session?", ask, "How would you rate your overall satisfaction with the training session?"

- Use standardized answer choices: Provide standardized, balanced answer choices to maintain consistency and objectivity in responses. Use Likert scales (e.g., Strongly Agree to Strongly Disagree) or numeric rating scales to quantify responses uniformly. Avoid options that might steer respondents toward a particular sentiment.

- Ensure anonymity: Design the questionnaire to allow anonymous responses, which encourages honesty and reduces the risk of biased feedback influenced by fear of repercussions. Anonymity can lead to more accurate and candid answers.

- Pilot test the questionnaire: Try out the questionnaire with a small group of participants before full deployment. This helps identify any ambiguous or biased questions and allows for adjustments to improve clarity and neutrality.

- Balance question types: Use a mix of question types, including multiple-choice, Likert scale, and rating scales, to capture a range of responses while minimizing the potential for bias. This variety helps ensure that responses are comprehensive and objective.

- Avoid double-barreled questions: Ensure each question addresses only one topic at a time. Double-barreled questions, which ask about two different things in one question, can confuse respondents and lead to inaccurate answers. For instance, instead of asking, "How satisfied were you with the content and delivery of the training?", separate it into two questions: one for content and one for delivery.

- Apply consistent scoring methods: Develop and apply a consistent scoring system for analyzing responses. Ensure that all responses are evaluated using the same criteria to maintain objectivity in the results.

- Train evaluators on objectivity or use automated reporting: If any questionnaire input, such as unscored qualitative answers, is analyzed by a team, make sure evaluators are trained to interpret the results consistently and objectively. This includes following standardized guidelines for coding and analyzing responses. For scored input, automated reporting applies the same criteria to every response.

- Include validation questions: Incorporate validation questions to check for consistency in responses. These can be designed to measure the reliability of the answers and identify any patterns of inconsistency or bias in the responses.

- Review and revise regularly: Periodically review and update the questionnaire based on feedback and results from previous assessments. Regular revisions help address any potential biases or issues and ensure that the questions remain relevant and objective.
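Two of the tips above, consistent scoring and validation questions, can be combined in a few lines of code. The Python sketch below maps Likert labels to fixed point values and flags a respondent whose answers to a positively and a negatively worded version of the same question contradict each other. The reverse-worded pair is only one common way to design validation questions, and the label mapping, the `tolerance` parameter, and the function names are assumptions for illustration.

```python
# Fixed mapping from Likert labels to points, applied identically to every response
LIKERT_POINTS = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}
SCALE_MAX = 5


def score(label: str) -> int:
    """Convert a Likert label to points; raises KeyError for unknown labels."""
    return LIKERT_POINTS[label]


def reverse_score(points: int, scale_max: int = SCALE_MAX) -> int:
    """Flip a score from a negatively worded item (5 -> 1, 4 -> 2, ...)."""
    return scale_max + 1 - points


def is_inconsistent(positive_label: str, negative_label: str, tolerance: int = 1) -> bool:
    """Flag a validation pair whose answers differ by more than `tolerance` points
    once the negatively worded item has been reverse-scored."""
    gap = abs(score(positive_label) - reverse_score(score(negative_label)))
    return gap > tolerance


print(is_inconsistent("Agree", "Agree"))     # True: contradictory answers
print(is_inconsistent("Agree", "Disagree"))  # False: consistent answers
```

Answering “Agree” to both “The training content was relevant to my job” and “The training content had little to do with my job” is flagged, while “Agree” followed by “Disagree” is not. Flagged responses are worth reviewing rather than automatically discarding.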

In navigating the complex landscape of organizational change, the most effective approach often involves crafting a tailored model that draws inspiration from collective knowledge and best practices while remaining uniquely aligned with the specific needs and dynamics of the organization or client.

While established change management models offer valuable frameworks and insights, they should serve as guiding principles rather than rigid templates. By customizing a model to fit the organization’s culture, goals, and challenges, leaders can foster a deeper sense of ownership and commitment among stakeholders, ultimately enhancing the likelihood of successful change adoption and sustainable outcomes.

This approach to creating assessments acknowledges the importance of leveraging existing expertise while embracing the individuality of each change initiative, empowering organizations to navigate change with agility, resilience, and purpose.

Create your first training assessment today
