The short answer is what we at Pointerpro call “auto-personalization”: a combination of automation and personalization. It means digitizing your assessments (your questionnaires) and your reports, and connecting the two. We’ll get into five strategic approaches below. But first, let’s lay out the problem more explicitly: the risk behind scaling your assessment methodology.

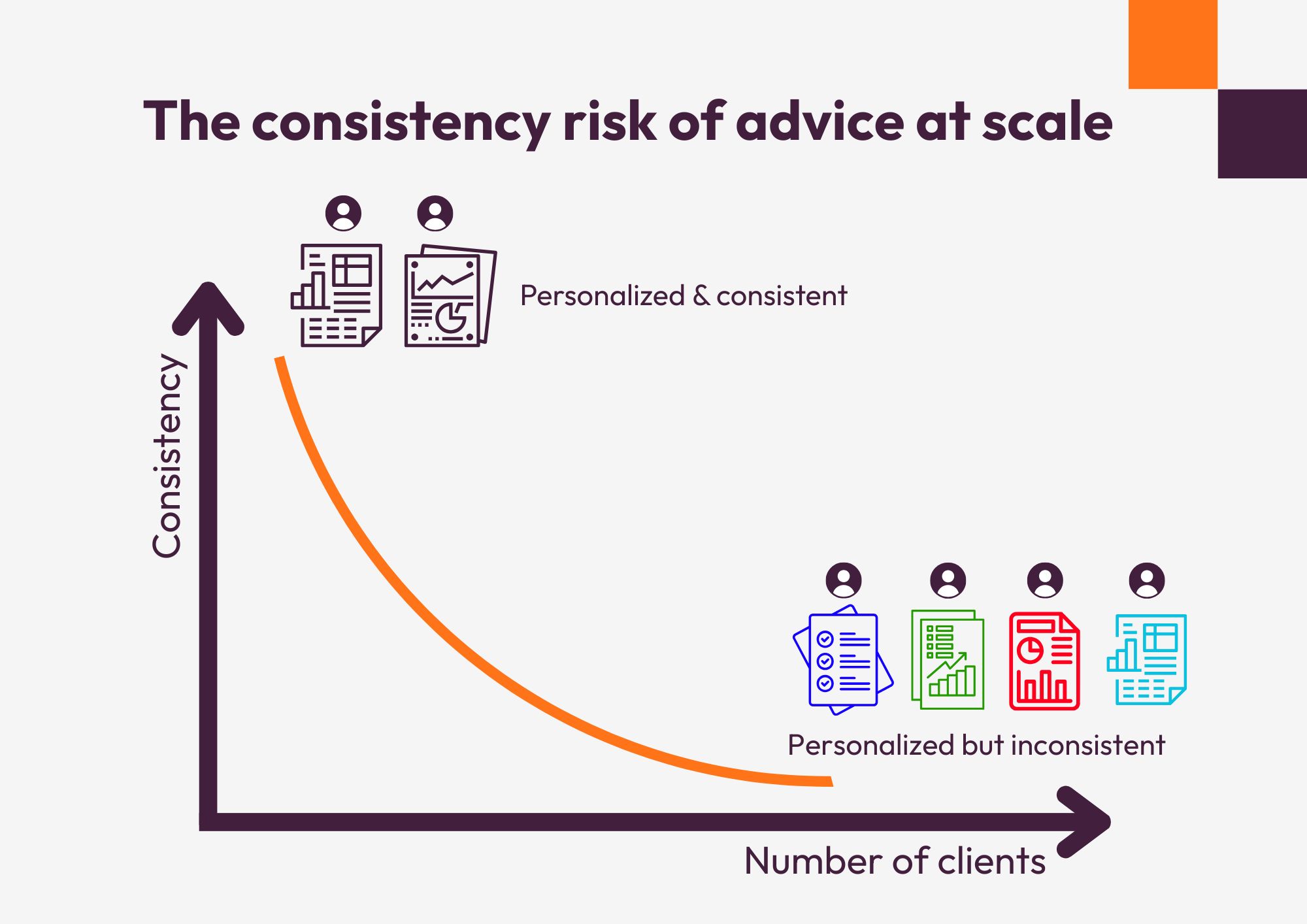

Here’s the dilemma for consultancy and advisory firms in any domain: a manual, one-to-one approach leads to fully personalized feedback for their clients, but at scale the approach becomes unsustainable and the feedback becomes inconsistent.

When different consultants interpret the same data, recommendations vary wildly. Where one expert would call for measures A, B and C, another reports that combining A, D and E is the way to go. The result? Your brand promise becomes unreliable.

Nonetheless, personalization is crucial for success.

At Pointerpro, we see new assessment report templates being built every day. We tend to see three levels of personalization, depending on the use cases (the number of questionnaires and reports users can create is unlimited).

This is where personalization starts: adding the respondent’s name, company, and assessment date. It doesn’t change the substance of the report, but it makes the recipient feel recognized. Think of it as the “minimum viable personalization” if you want to improve response rates.
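
No code is needed to do this in an assessment tool, but the underlying idea is simple placeholder substitution. Here is a minimal Python sketch of that idea. The template text and placeholder names are hypothetical and only illustrate the principle; they are not how Pointerpro stores its templates.

```python
from string import Template

# Hypothetical cover-page template; the placeholder names are illustrative.
COVER = Template("Prepared for $name at $company on $assessment_date")


def render_cover(name: str, company: str, assessment_date: str) -> str:
    """Fill the respondent's details into otherwise identical report text."""
    return COVER.substitute(
        name=name, company=company, assessment_date=assessment_date
    )


print(render_cover("Ada Jones", "Acme Ltd", "2024-05-01"))
# prints: Prepared for Ada Jones at Acme Ltd on 2024-05-01
```

The substance of the report stays the same for everyone; only the labels change.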

At this level, explanations and recommendations shift depending on who the respondent is or how they answer isolated key questions.

For example, managers might see more leadership-focused content in a personality assessment report than other participants. This requires planning multiple content paths and is usually manageable when dealing with smaller volumes or niche audiences.

Here’s where true “auto-personalization” happens. The report doesn’t just change based on isolated answers. It dynamically adapts to patterns, scores, and complex response combinations.

The result: highly tailored, actionable recommendations that still remain consistent across large volumes of respondents. This used to require significant technical expertise or countless hours of coding. Today, thanks to some key features for dynamic recommendations, an assessment tool can get you there (at a much lower cost).

I know it sounds very corny but without Pointerpro as an enabler, there is absolutely no way that we could have done what we’ve achieved so far. – Steve Howe (Founder of ResilienceBuilder®)

Steve Howe developed a resilience coaching methodology and productized it into Pointerpro-built assessments and reports.


The good news is that you can reach a very high level of personalization, even when delivering assessments and reports at scale. You don’t need to hire developers or write code.

So, let’s make it concrete and look at the mechanisms you can use to attain these different levels with Pointerpro.

This approach assigns respondents to predefined categories based on their responses and delivers distinct content for each category. Think of it as creating profiles: each respondent falls into one group, and the report adapts accordingly.

Best fit for personalization level 2: Content adaptation. Outcomes can also be determined by scores, but typically they’re used to adapt content more globally – at the level of sections, narratives, or even the entire report – rather than fine-tuning every paragraph or visual.
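
To make the logic tangible, here is a minimal Python sketch of outcome-based personalization. Everything in it is an assumption made for illustration: the profile names, the experience cut-offs, and the section titles are hypothetical, and in a tool like Pointerpro you would configure such rules rather than code them.

```python
from dataclasses import dataclass


@dataclass
class Respondent:
    role: str               # e.g. "director" or "manager"
    years_experience: int


# Hypothetical report variants: whole sections swap per outcome.
REPORT_SECTIONS = {
    "experienced_director": ["Strategic leadership", "Board communication"],
    "inexperienced_manager": ["Your first 90 days", "Delegation basics"],
    "general": ["Core findings"],
}


def assign_outcome(respondent: Respondent) -> str:
    """Place a respondent in exactly one predefined category."""
    # The cut-offs (10 and 3 years) are illustrative assumptions.
    if respondent.role == "director" and respondent.years_experience >= 10:
        return "experienced_director"
    if respondent.role == "manager" and respondent.years_experience < 3:
        return "inexperienced_manager"
    return "general"


def build_report(respondent: Respondent) -> list:
    """Return the sections that belong to the respondent's outcome."""
    return REPORT_SECTIONS[assign_outcome(respondent)]
```

Because each respondent lands in exactly one outcome, two people with the same profile always receive the same report version, which is what keeps the feedback consistent.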

Where outcome-based personalization tends to be used for grouping respondents into broad categories, the score-based mechanism personalizes at the level of specific dimensions or competencies.

Rather than assigning someone to a profile, it analyzes their responses in different areas and tailors content accordingly.

Best fit for personalization levels 2–3: From content adaptation to dynamic intelligence. Score-based personalization makes reports more granular and actionable than outcome-based personalization, while still being scalable.
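
As a rough illustration of the score-based logic, the Python sketch below maps each dimension’s score to a low, medium, or high band and picks the matching recommendation. The 0–100 scale, the cut-offs, the dimension names, and the recommendation texts are all hypothetical assumptions, not Pointerpro defaults.

```python
# Hypothetical cut-offs on an assumed 0-100 scale.
LOW_MAX = 40    # scores below this are "low"
HIGH_MIN = 70   # scores at or above this are "high"

# Hypothetical content library: one recommendation per dimension and band.
RECOMMENDATIONS = {
    "emotional_intelligence": {
        "low": "Quick win: open each team meeting with a short check-in.",
        "medium": "Ask for feedback after difficult conversations.",
        "high": "Advanced tip: coach a colleague on handling conflict.",
    },
    "delegation": {
        "low": "Quick win: hand over one recurring task this week.",
        "medium": "Agree on outcomes up front instead of on methods.",
        "high": "Advanced tip: delegate decisions, not just tasks.",
    },
}


def band(score: float) -> str:
    """Translate a numeric score into a low / medium / high band."""
    if score < LOW_MAX:
        return "low"
    if score >= HIGH_MIN:
        return "high"
    return "medium"


def recommend(scores: dict) -> dict:
    """Pick one recommendation per scored dimension."""
    return {dim: RECOMMENDATIONS[dim][band(score)] for dim, score in scores.items()}
```

Each dimension is evaluated on its own, so a respondent can get a “quick win” for one competency and an advanced tip for another in the same report.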

While the score-based mechanism looks at individual dimensions in isolation (low, medium, high), the formula-based mechanism goes a step further. It combines multiple variables – often with different weights – into composite or calculated scores. This makes it possible to reflect how different factors interact in real life, instead of treating them as separate silos.

Best fit for personalization level 3: Dynamic intelligence. Unlike outcome-based or score-based mechanisms, formula-based personalization can capture the interaction effect between variables – something a simple “low vs. high” score cannot do.
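
The Python sketch below shows one way such a formula could look: a weighted composite plus a rule that fires only for a specific combination of scores. The variable names, weights, scale (0–100), and thresholds are hypothetical assumptions chosen for the example, not a formula taken from any real assessment.

```python
# Hypothetical weights (summing to 1) for scores on an assumed 0-100 scale.
WEIGHTS = {"stress": 0.5, "role_clarity": 0.3, "leadership": 0.2}


def wellbeing_index(scores: dict) -> float:
    """Weighted composite; stress is inverted so high stress lowers the index."""
    adjusted = dict(scores)
    adjusted["stress"] = 100 - scores["stress"]
    return sum(weight * adjusted[name] for name, weight in WEIGHTS.items())


def feedback(scores: dict) -> str:
    """Choose feedback from the composite and from how the variables combine."""
    # Interaction rule: high stress together with low role clarity gets its
    # own message, which a single low/high band per dimension could not express.
    if scores["stress"] >= 70 and scores["role_clarity"] < 40:
        return "Priority: clarify responsibilities to reduce stress."
    if wellbeing_index(scores) < 50:
        return "Your overall battery is running low."
    return "Your overall battery is well charged."
```

With stress at 80, role clarity at 60, and leadership at 50, the index works out to 0.5 × 20 + 0.3 × 60 + 0.2 × 50 = 38, so the respondent would see the “running low” message.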

Try out the embedded security assessment example below to see how just 10 simple questions can already produce an insightful report with dynamic chart visuals. The textual feedback in a report like this one can be personalized based on the same formula calculations.

The hybrid mechanism blends outcomes, scores, and formulas into one layered personalization logic. Instead of relying on just one approach, it lets you stack them so that high-level categories, detailed scores, and nuanced formulas all work together.

This is how you get reports that are both globally consistent and individually precise.

Best fit for personalization level 3: Dynamic intelligence at scale. This mechanism is especially powerful when you’re serving diverse audiences with complex needs, where neither a single outcome nor a single score tells the full story.
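
To sketch how the layers can stack, here is a minimal Python example that runs an outcome rule, per-dimension score bands, and a weighted formula side by side and merges them into one report structure. All names, weights, and cut-offs are hypothetical and exist only to illustrate the layering.

```python
# All names, weights, and cut-offs below are hypothetical.
WEIGHTS = {"saving": 0.4, "planning": 0.4, "risk_awareness": 0.2}


def outcome(answers: dict) -> str:
    """Layer 1: one broad profile per respondent."""
    return "cautious" if answers["avoids_debt"] else "opportunistic"


def band(score: float) -> str:
    """Layer 2: low / medium / high per dimension (assumed 0-100 scale)."""
    if score < 40:
        return "low"
    if score >= 70:
        return "high"
    return "medium"


def composite(scores: dict) -> float:
    """Layer 3: weighted formula across dimensions."""
    return sum(WEIGHTS[dim] * scores[dim] for dim in WEIGHTS)


def build_report(answers: dict, scores: dict) -> dict:
    """Stack the three layers into one report structure."""
    return {
        "profile": outcome(answers),                          # global framing
        "bands": {dim: band(s) for dim, s in scores.items()}, # per-dimension detail
        "overall": band(composite(scores)),                   # calculated summary
    }
```

The profile decides the overall narrative, the bands decide the detailed recommendations, and the composite gives a summary verdict, so the same respondent data feeds every layer.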

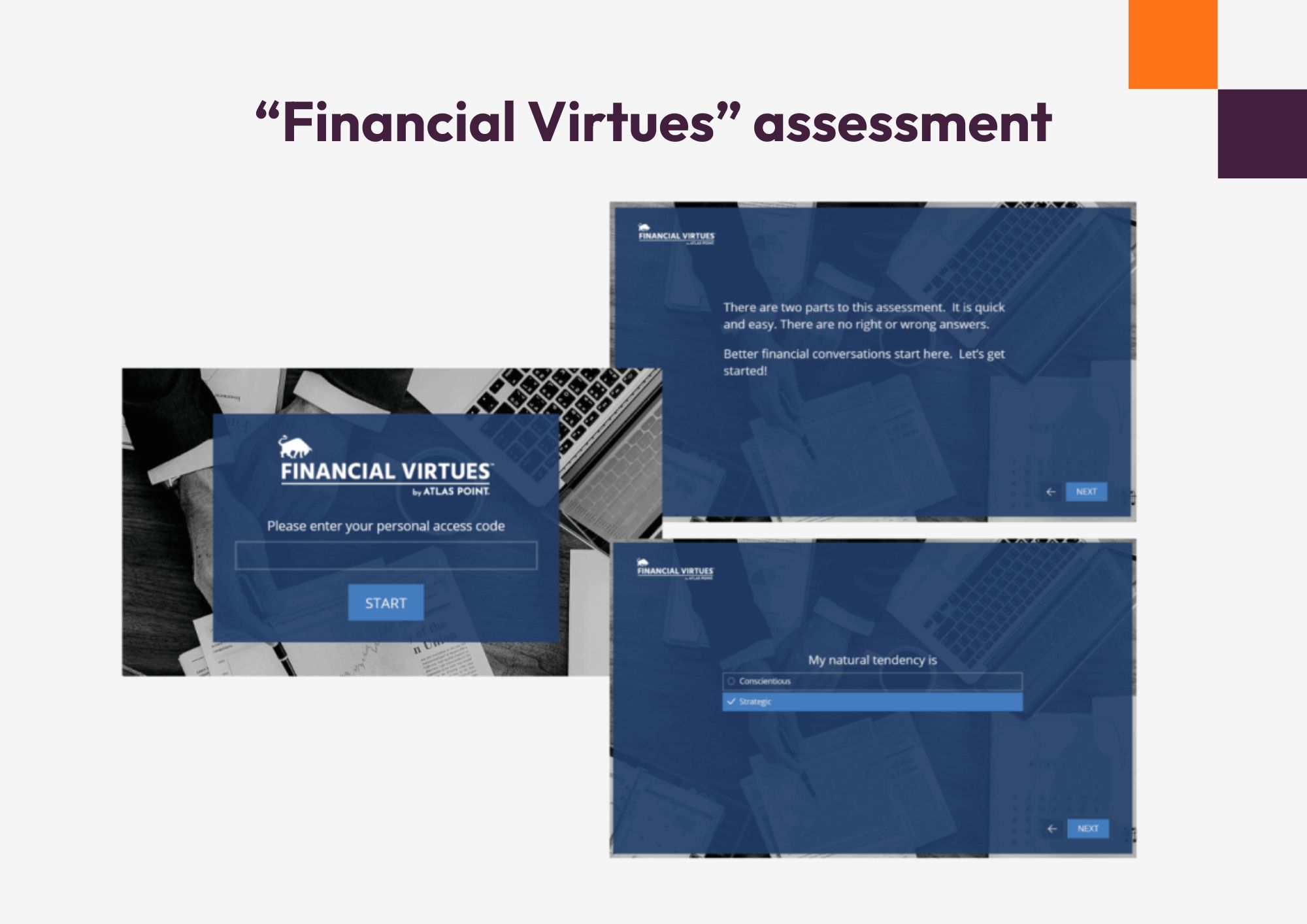

Atlas Point, a financial consultancy, designed a “financial virtues assessment” with Pointerpro so that financial advisors can generate tailored advice and reports based on a respondent’s behavioral profile.

Unlike the other mechanisms, which focus on what kind of content to show (categories, scores, formulas), progressive disclosure is about how much content to reveal at once.

It delivers the most essential insights first, then progressively reveals more detail only when certain criteria are met. This prevents information overload and ensures respondents focus on what matters most.

Best fit for personalization levels 2–3: Content adaptation and dynamic intelligence. Progressive disclosure works especially well for long or complex assessments, where overwhelming respondents with too much information at once would reduce engagement.

In Better Minds at Work’s Human Capital Scan, built with Pointerpro, individual reports show everyone their energy and stress factors upfront, then progressively reveal more detailed visualizations and recommended actions for areas needing attention (e.g. highlighted in red or orange).

At the organizational level, more advanced layers reveal department-level patterns and deeper analytics.
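
In code terms, progressive disclosure boils down to a condition on each block of content. The Python sketch below always shows the headline score and only attaches detail when a factor falls below an attention threshold. The threshold, the factor names, the texts, and the assumption that lower scores mean “needs attention” are all hypothetical.

```python
# Hypothetical threshold on an assumed 0-100 scale where lower scores
# mean the area needs more attention.
ATTENTION_BELOW = 50

# Hypothetical detail texts, revealed only when the condition is met.
DETAILS = {
    "energy": "Detailed breakdown of your energy sources, with suggested actions.",
    "stress": "Detailed breakdown of your stress factors, with suggested actions.",
}


def build_sections(factor_scores: dict) -> list:
    """Show every factor's score; reveal details only where attention is needed."""
    sections = []
    for factor, score in factor_scores.items():
        section = {"factor": factor, "score": score}  # always shown
        if score < ATTENTION_BELOW:
            section["details"] = DETAILS.get(factor, "More detail is available.")
        sections.append(section)
    return sections
```

A respondent who scores well everywhere gets a short report, while someone with one weak area gets extra depth exactly there and nowhere else.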

| Mechanism | Best fit for personalization level | How it works (in brief) | Example in action | Best when you need… |
| --- | --- | --- | --- | --- |
| Outcome-based | Level 2: Content adaptation | Respondents are assigned to predefined categories (e.g. profiles). Entire sections of the report adapt to their outcome. | Profiles like “experienced director” vs. “inexperienced manager” → each sees a different report version. | A clear, global adaptation of content per profile. |
| Score-based | Levels 2–3: From content adaptation to dynamic intelligence | Question blocks or items are scored; thresholds (low, medium, high) determine which recommendations appear. | In leadership assessments, low scorers in emotional intelligence get “quick wins,” high scorers get advanced tips. | Granular tailoring on specific competencies. |
| Formula-based | Level 3: Dynamic intelligence | Multiple scores are combined (sometimes with weights) into composite results; reports adapt based on these calculations. | Better Minds at Work’s Human Capital Scan combines stress, role clarity, and leadership into wellbeing “battery” visuals. | Capturing interactions between variables for nuanced advice. |
| Hybrid multi-layer | Level 3: Dynamic intelligence at scale | Combines outcomes, scores, and formulas in layered logic for both global and detailed personalization. | Atlas Point’s financial virtues assessment blends behavioral outcomes with financial logic to guide advisors. | Maximum precision for diverse audiences with complex needs. |
| Progressive disclosure | Levels 2–3: Content adaptation & dynamic intelligence | Core insights are shown to everyone; advanced detail is revealed only when conditions are met. | Better Minds at Work shows energy/stress factors first, then deeper recommendations and team-level analytics. | Preventing overload while keeping reports engaging and focused. |

We’ve built thousands of variables into this one specific report template. And the rest is history. It works. It’s just perfect! – Riaan van der Merwe (CEO of Attain Global)

Riaan and Attain Global specialize in psychometrics and offer bespoke solutions in 10+ countries across the EMEA region.



Even with the right mechanisms in place, it’s possible to misstep when designing personalized assessment reports. Here are the four most common pitfalls we warn Pointerpro users about during onboarding, and how to avoid them.

It’s easy to think of personalization as something that only happens inside the report. But every interaction your respondent has with your assessment is part of the experience, and an opportunity to reinforce relevance and consistency.

Three areas are often overlooked:

The first and last impression often comes by email: the invitation to take the assessment, the reminders, and the result notifications. With Pointerpro you can:

This ensures that the communication before and after the assessment is just as tailored as the report itself.

Nobody enjoys answering irrelevant questions. That’s why Pointerpro’s question logic feature lets you adapt the path of the questionnaire in real time. You can:

The result is a smoother experience, higher completion rates, and better-quality data – all before the respondent even sees the report.

Right after someone completes the questionnaire, the final screen is their first moment of feedback. With Pointerpro you can:

Think of it as a teaser: it delivers immediate value while building anticipation for the full PDF report that follows.

Scaling your expertise doesn’t mean sacrificing personalization. With the right mechanisms in place, you can deliver reports that are both consistent and truly tailored – and do it all without writing a single line of code.

That’s exactly what Pointerpro was built for: transforming questionnaires into automatically personalized reports that impress clients, boost engagement, and save you countless hours of manual work.

Ready to see how it works in practice? Book a demo with our team and discover how you can start delivering auto-personalized reports at scale.

"We use Pointerpro for all types of surveys and assessments across our global business, and employees love its ease of use and flexible reporting."

Director at Alere

"I give the new report builder 5 stars for its ease of use. Anyone without coding experience can start creating automated personalized reports quickly."

CFO & COO at Egg Science

"You guys have done a great job making this as easy to use as possible and still robust in functionality."

Account Director at Reed Talent Solutions

“It’s a great advantage to have formulas and the possibility for a really thorough analysis. There are hundreds of formulas, but the customer only sees the easy-to-read report. If you’re looking for something like that, it’s really nice to work with Pointerpro.”

Country Manager Netherlands at Better Minds at Work

Yes. Pointerpro connects with platforms like HubSpot, Zapier, and Make, allowing you to automate workflows - for example, sending completed report data into your CRM or triggering follow-up emails.

Absolutely. You can fully brand your reports with your logo, fonts, and colors, ensuring every document looks like it comes straight from your consultancy or company department.

Yes. Many consultancies use assessments as gated content: respondents answer a questionnaire, then immediately receive a personalized report. This creates a valuable exchange and helps convert prospects into qualified leads.

Pointerpro is used by consultants, HR leaders, L&D teams, coaches, and advisory firms that want to scale their expertise by digitizing assessments and delivering automated reports.
